MGIT Benchmark: Dataset

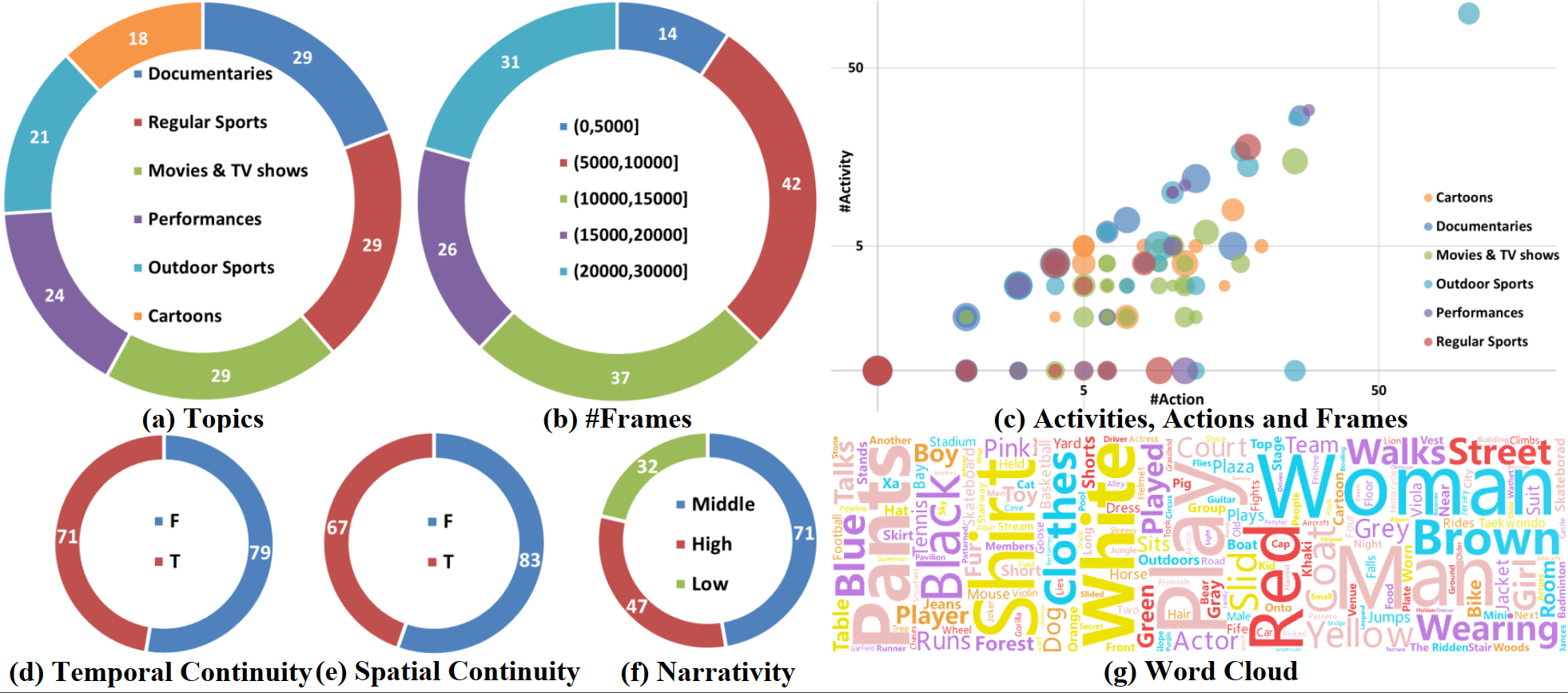

We first propose a new multi-modal global instance tracking benchmark named MGIT. It consists of 150 long-term videos totaling 2.03M frames, with an average length of about 13,500 frames per video. Together, these videos sufficiently cover the complex spatio-temporal causal relationships of long videos.

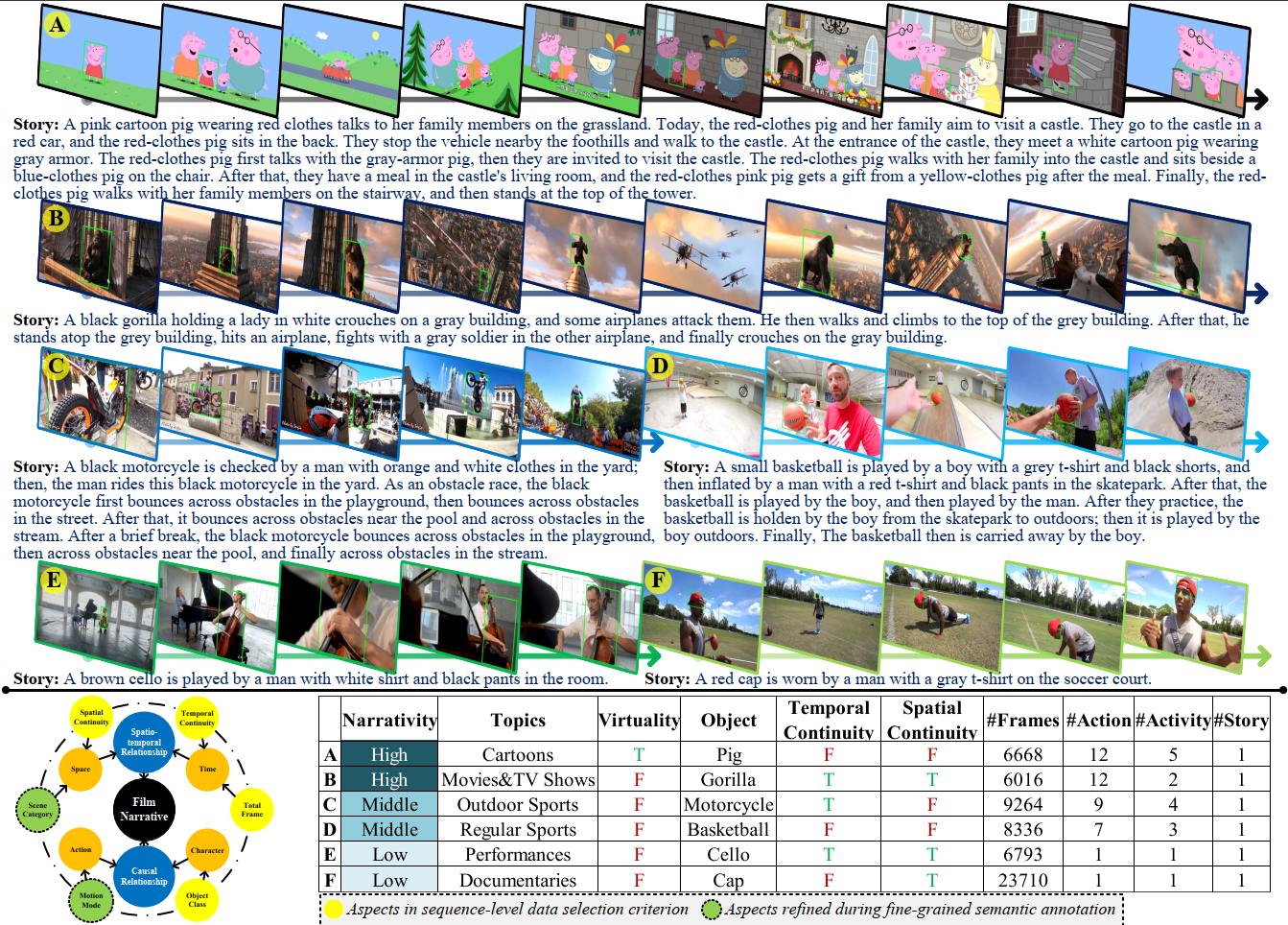

Data Collection

MGIT follows the data collection procedure of the recently proposed large-scale benchmark VideoCube. VideoCube draws on the concept of film narrative (i.e., a chain of causally related events occurring in space and time) and proposes the 6D principle for benchmark construction. In this work, we divide the 6D principle into two parts. Four dimensions (i.e., object class, spatial continuity, temporal continuity, and total frames) are combined with narrativity and topic to form a new sequence-level selection criterion. The other two dimensions (i.e., motion mode and scene category) are refined during fine-grained semantic annotation.
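The split of the 6D principle can be written down as plain set operations. In the sketch below, the dimension names come from the text. The variable names `SIX_D`, `ANNOTATION_LEVEL` and `SEQUENCE_LEVEL` are hypothetical and are used only to show which dimensions end up in which stage.

```python
# The six dimensions of VideoCube's 6D principle, as named in the text.
SIX_D = {
    "object class", "spatial continuity", "temporal continuity",
    "total frames", "motion mode", "scene category",
}

# These two dimensions are refined later, during fine-grained semantic annotation.
ANNOTATION_LEVEL = {"motion mode", "scene category"}

# The remaining four dimensions, plus narrativity and topic, form the
# sequence-level selection criterion (hypothetical variable names).
SEQUENCE_LEVEL = (SIX_D - ANNOTATION_LEVEL) | {"narrativity", "topic"}

# Sanity checks on the split described in the text.
assert ANNOTATION_LEVEL <= SIX_D
assert len(SIX_D - ANNOTATION_LEVEL) == 4

print(sorted(SEQUENCE_LEVEL))
```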

MGIT Benchmark: Annotation Strategy

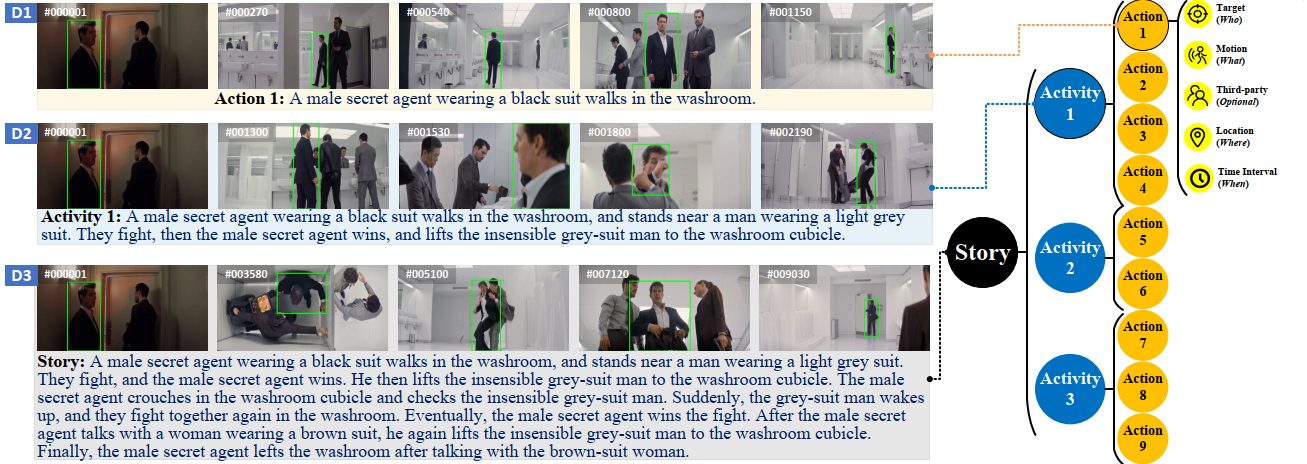

Hierarchical multi-granularity semantic annotation strategy

We design a hierarchical multi-granularity semantic annotation strategy to provide systematic natural language information. Video content is annotated at three granularities (i.e., action, activity, and story).

At the action level, the annotation dimensions are derived from both natural language grammatical structure and the narrative content of the video. We use the following critical narrative elements to describe an action: the tracking target (who), the motion (what) and the third-party object (if present), the location (where), and the time interval (when).
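An action-level annotation can be viewed as a small record with one field per narrative element. The sketch below is illustrative and is not the official MGIT annotation format. The field names, the use of an inclusive `(start_frame, end_frame)` pair for "when", and the sentence template in `describe` are all assumptions.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class Action:
    """One action annotation built from the narrative elements in the text.

    Field names and types are hypothetical, not the official MGIT format.
    """
    target: str                        # who: the tracking target
    motion: str                        # what: the motion being performed
    location: str                      # where
    interval: Tuple[int, int]          # when: assumed (start_frame, end_frame), inclusive
    third_party: Optional[str] = None  # third-party object, if present

    def __post_init__(self) -> None:
        # Reject intervals that start before frame 0 or end before they start.
        start, end = self.interval
        if start < 0 or end < start:
            raise ValueError(f"invalid frame interval: {self.interval}")

    def describe(self) -> str:
        """Render who + what (+ third-party object) + where as one sentence.

        The template is a hypothetical illustration; MGIT descriptions are
        written by annotators.
        """
        obj = f" {self.third_party}" if self.third_party else ""
        return f"{self.target} {self.motion}{obj} {self.location}"
```

For example, `Action("the man", "picks up", "in the kitchen", (0, 120), "a cup").describe()` returns `"the man picks up a cup in the kitchen"`. If no third-party object is given, the record simply omits it.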

At the activity level, causality is the basis for grouping. An action describes what happens in a short period, whereas an activity is a collection of actions with clear causal relationships. A new activity usually begins with a scene switch or an explicit change of the third-party object.

The story level is a high-level description that reinforces temporal and causal relationships. It builds on the actions and activities, using guiding words such as "first", "then", "after that", and "finally".
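The three granularities nest naturally: a story contains activities, and each activity contains actions. The sketch below models this hierarchy. The class names, fields and the `narrate` helper are hypothetical. `narrate` only illustrates how guiding words link activities in temporal order. In MGIT, stories are written by annotators, not generated.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Action:
    text: str   # short-term description of what happens
    start: int  # first frame (assumed frame-index interval)
    end: int    # last frame


@dataclass
class Activity:
    # Causally related actions. A new activity usually begins at a scene
    # switch or an explicit change of the third-party object.
    summary: str
    actions: List[Action] = field(default_factory=list)


@dataclass
class Story:
    activities: List[Activity] = field(default_factory=list)

    def num_actions(self) -> int:
        # Count actions across all activities in the story.
        return sum(len(a.actions) for a in self.activities)

    def narrate(self) -> str:
        """Link activity summaries with temporal guiding words (illustrative only)."""
        summaries = [a.summary for a in self.activities]
        n = len(summaries)
        if n == 0:
            return ""
        if n == 1:
            return summaries[0] + "."
        parts = []
        for i, s in enumerate(summaries):
            if i == 0:
                word = "First"
            elif i == n - 1:
                word = "finally"
            elif i == 1:
                word = "then"
            else:
                word = "after that"
            parts.append(f"{word}, {s}")
        return "; ".join(parts) + "."
```

Consider a story with three activities: "the man enters the kitchen", "he picks up a cup" and "he leaves the room". For this story, `narrate()` returns `"First, the man enters the kitchen; then, he picks up a cup; finally, he leaves the room."`.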

High-quality annotation

Based on this multi-granularity annotation strategy, we construct MGIT and provide high-quality annotations covering 2.03 million frames. The semantic annotations comprise 150 stories, 621 activities, and 982 actions. These descriptions contain 77,652 words in total, with a vocabulary of 921 unique words.
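Several ratios follow directly from the reported counts, and computing them gives a quick consistency check. For example, 2.03M frames over 150 videos works out to roughly 13,533 frames per video, which matches the stated average of about 13,500. There are also 4.14 activities per story and about 1.58 actions per activity. These derived values are not figures stated by the authors. The 2.03M frame count is itself rounded.

```python
# Headline counts as reported in the text; "2.03M frames" is a rounded figure.
REPORTED = {
    "videos": 150,
    "frames": 2_030_000,
    "stories": 150,
    "activities": 621,
    "actions": 982,
    "words": 77_652,
    "unique_words": 921,
}


def derived_stats(s: dict) -> dict:
    """Ratios implied by the reported counts (derived here, not stated by the authors)."""
    return {
        "frames_per_video": s["frames"] / s["videos"],
        "activities_per_story": s["activities"] / s["stories"],
        "actions_per_activity": s["actions"] / s["activities"],
        "words_per_video": s["words"] / s["videos"],
        "unique_word_ratio": s["unique_words"] / s["words"],
    }


if __name__ == "__main__":
    for name, value in derived_stats(REPORTED).items():
        print(f"{name:>22}: {value:,.4f}")
```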

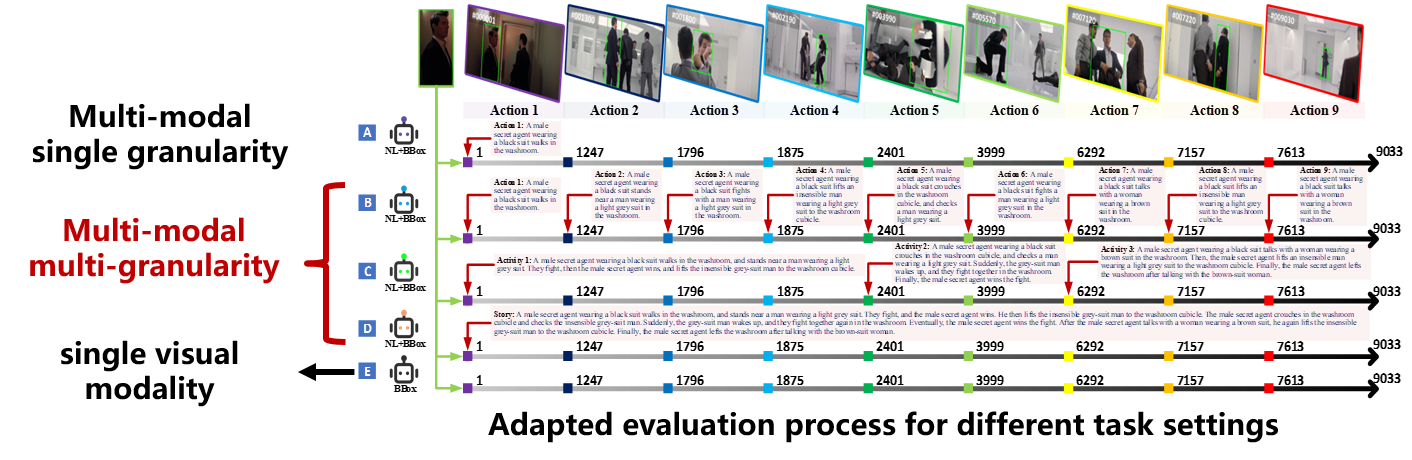

MGIT Benchmark: Evaluation Mechanism

We expand the evaluation mechanism by conducting experiments under two kinds of mechanisms. The first kind is the traditional evaluation mechanisms (multi-modal single granularity and single visual modality). The second kind is mechanisms adapted to this work (multi-modal multi-granularity).

Mechanism A: We only allow trackers to use the semantic information of the first action in this experiment.

Mechanisms B-D: Multi-modal trackers can use both visual information (the BBox of the first frame) and semantic information (natural language descriptions).

Mechanism E: We only allow trackers to use the visual information (BBox of the first frame).
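The mechanisms differ mainly in which initialization cues a tracker may see. The sketch below masks a sequence's cues according to the mechanism. The `(x, y, w, h)` box format, the `TrackerInput` type and the `build_input` helper are hypothetical. The text does not spell out how Mechanisms B, C and D differ from one another, so this sketch gives all three the same cues.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

BBox = Tuple[float, float, float, float]  # assumed (x, y, w, h) format


@dataclass(frozen=True)
class TrackerInput:
    first_frame_bbox: Optional[BBox]  # None when visual cues are withheld
    language: Optional[str]           # None when semantic cues are withheld


# Cues each mechanism exposes, following the text. B-D are treated
# identically here because their differences are not specified.
MECHANISM_CUES = {
    "A": {"language"},          # semantic information of the first action only
    "B": {"bbox", "language"},
    "C": {"bbox", "language"},
    "D": {"bbox", "language"},
    "E": {"bbox"},              # visual information only
}


def build_input(mechanism: str, bbox: BBox, description: str) -> TrackerInput:
    """Keep only the cues allowed under `mechanism` (hypothetical helper).

    For Mechanism A, `description` should be the first action's annotation.
    Raises KeyError for an unknown mechanism.
    """
    cues = MECHANISM_CUES[mechanism]
    return TrackerInput(
        first_frame_bbox=bbox if "bbox" in cues else None,
        language=description if "language" in cues else None,
    )
```

Keeping the mechanism-to-cue mapping in one table makes it easy to verify that a tracker is never given a cue its mechanism forbids.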